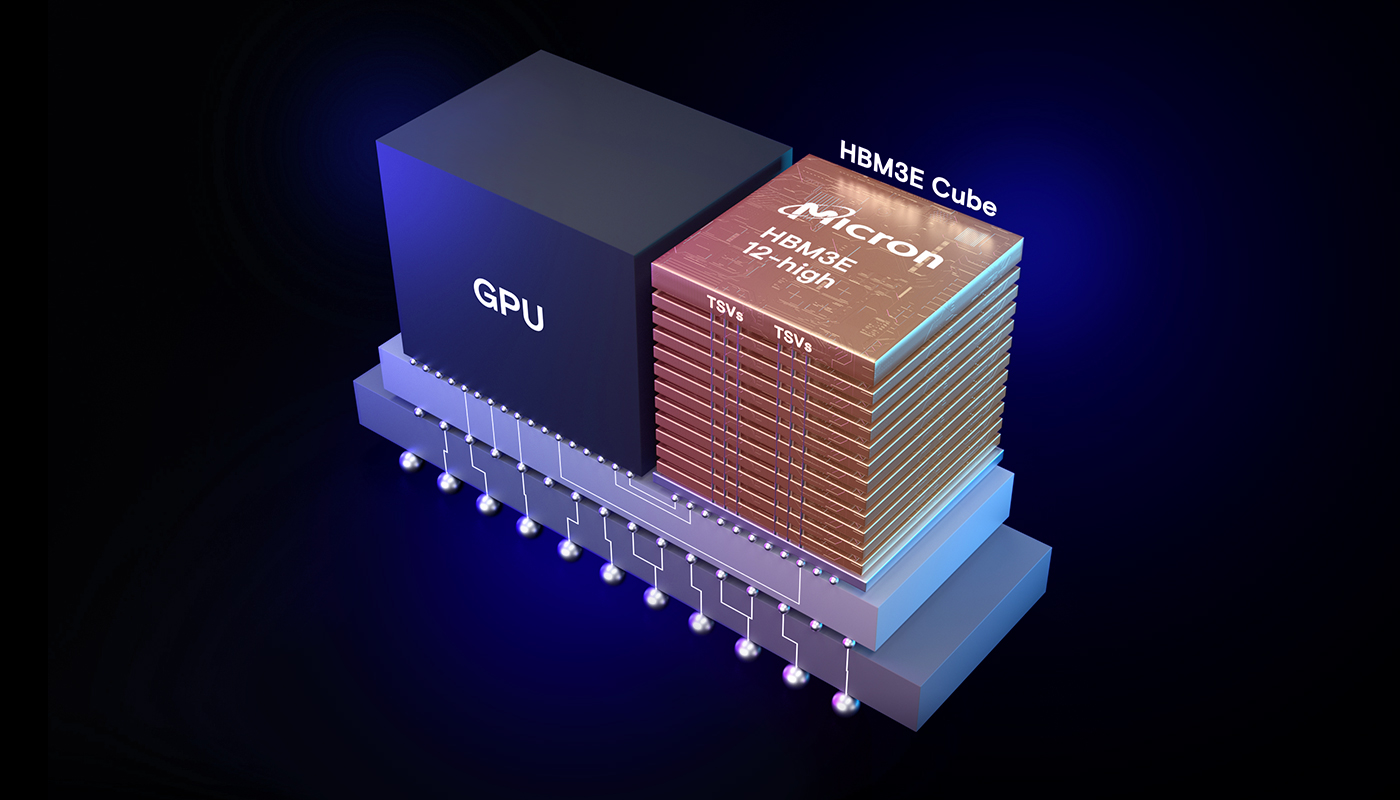

Now shipping with NVIDIA H200 Tensor Core GPUs

This white paper examines how new technologies are affecting memory providers and argues that advanced products such as high-bandwidth memory (HBM) are fundamentally reshaping the industry. Rather than being relegated to low-margin commodity status, memory companies now play an elevated role in the critical AI supply chain.


Micron’s comprehensive product portfolio is designed to meet diverse customer needs, with a focus on performance, capacity and power efficiency. Higher bandwidth and speeds unlock the full potential of CPUs, GPUs and TPUs, while reliability and fault tolerance help prevent setbacks during AI model training. With more memory capacity, Micron products handle massive data files, enhancing GPU performance and scalability.

Watch Raj Narasimhan, SVP and GM of Micron’s Compute and Networking Business Unit, show how Micron’s cutting-edge solutions unlock the future of computing. Our products are designed to deliver unparalleled performance, reliability and efficiency.

Micron’s new HBM3E 12-high 36GB memory is a game-changer for your AI workloads, offering 50% more capacity and significantly lower power consumption than competitors. Now shipping to key industry partners, this memory solution is set to meet the growing demands of your AI infrastructure. Continue reading to discover how Micron’s collaboration with TSMC further strengthens its position in the market, paving the way for future advancements in your AI-enabled data center.

Sanjay Mehrotra, president and chief executive officer, Micron

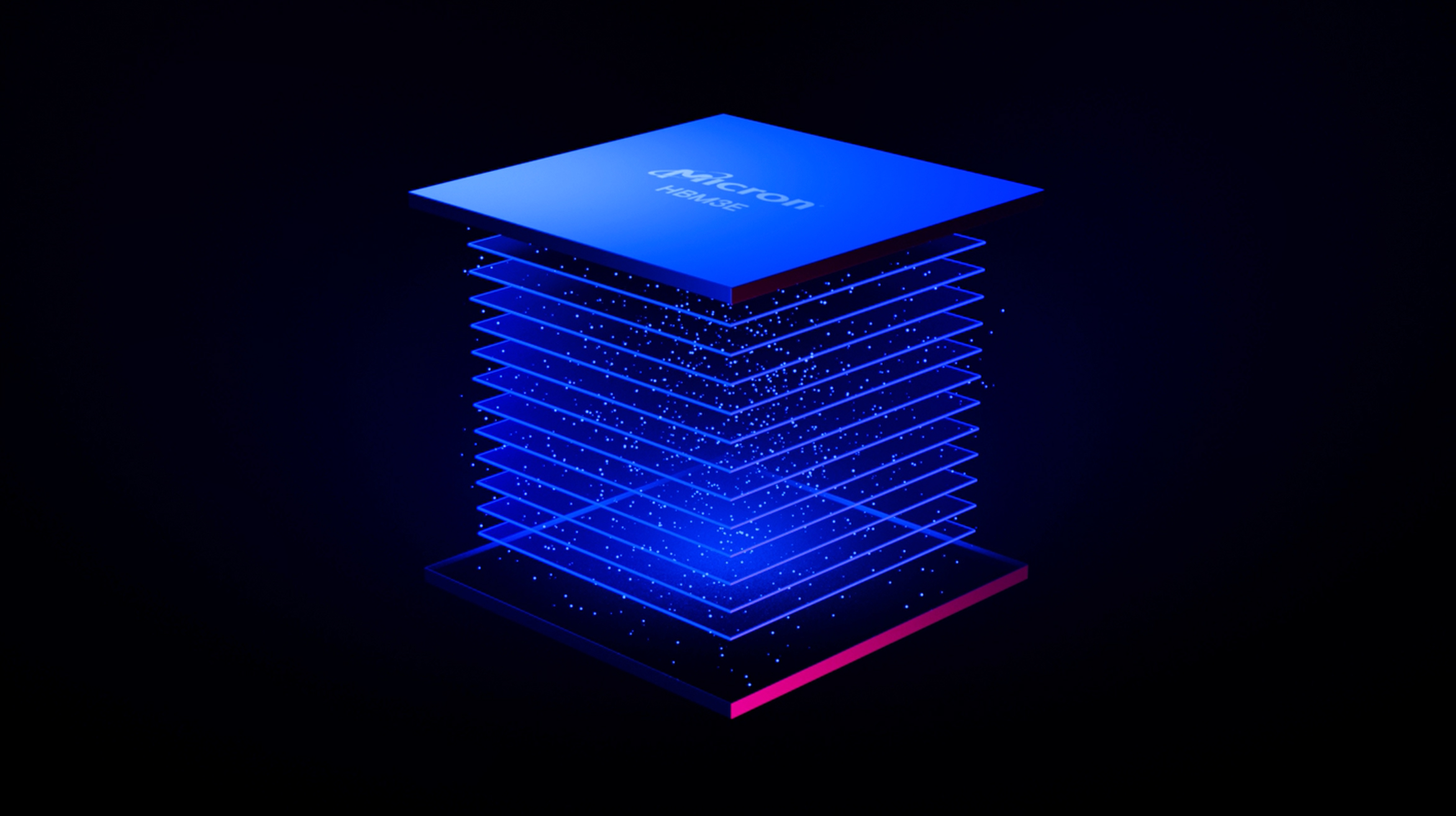

Micron HBM3E is the most energy-efficient memory technology for AI needs, as measured by picojoules (pJ) per bit, with up to 30% lower power consumption than the competition.

To support AI's growing data demands, HBM3E delivers maximum throughput at the lowest power consumption, improving key data center operating expense (OpEx) metrics.
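Because pJ/bit measures the energy spent per transferred bit, interface power scales as energy per bit multiplied by bit rate: P = E_bit × (bandwidth in bytes/s × 8). The minimal sketch below applies that arithmetic to the 1.2 TB/s bandwidth figure Micron cites for HBM3E. The 4.0 pJ/bit baseline is a hypothetical placeholder, not a Micron or competitor figure, and the helper name is illustrative. It also shows why, at equal bandwidth, a 30% lower pJ/bit translates directly into 30% lower power.

```python
# Minimal sketch: memory interface power from energy per bit and bandwidth.
# ASSUMPTION: the 4.0 pJ/bit baseline is a hypothetical placeholder, not vendor data.

def interface_power_watts(energy_pj_per_bit: float, bandwidth_tb_per_s: float) -> float:
    """Return power in watts = (joules per bit) x (bits per second)."""
    joules_per_bit = energy_pj_per_bit * 1e-12        # picojoules -> joules
    bits_per_second = bandwidth_tb_per_s * 1e12 * 8   # decimal TB/s -> bits/s
    return joules_per_bit * bits_per_second


BANDWIDTH_TB_S = 1.2         # bandwidth figure cited in the text
BASELINE_PJ_PER_BIT = 4.0    # hypothetical value for illustration only

baseline_w = interface_power_watts(BASELINE_PJ_PER_BIT, BANDWIDTH_TB_S)
# 30% lower energy per bit at the same bandwidth gives 30% lower power.
reduced_w = interface_power_watts(BASELINE_PJ_PER_BIT * 0.7, BANDWIDTH_TB_S)

print(f"baseline: {baseline_w:.1f} W, 30% lower pJ/bit: {reduced_w:.1f} W")
```

With these placeholder inputs the script prints 38.4 W for the baseline and 26.9 W for the reduced case; only the ratio between the two, not the absolute values, reflects the claim in the text.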

Micron HBM3E is the fastest, highest-capacity high-bandwidth memory for advancing generative AI innovation. Offered in 8-high 24GB and 12-high 36GB cubes, it delivers more than 1.2 TB/s of bandwidth and superior power efficiency, all built on Micron’s leading 1β process node technology.
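The capacity figures can be sanity-checked with simple arithmetic: dividing each cube’s capacity by its stack height gives the capacity of one DRAM die, and 36GB is 50% more than 24GB, consistent with the 50% capacity increase cited for the 12-high cube. The minimal Python sketch below assumes decimal gigabytes and that every die in a stack has the same capacity; the function names are illustrative.

```python
# Minimal sketch: per-die capacity and relative capacity gain for HBM3E cubes.
# ASSUMPTION: every die in a stack has the same capacity.

def per_die_capacity_gb(cube_capacity_gb: float, stack_height: int) -> float:
    """Capacity of one DRAM die in a stack of `stack_height` dies."""
    return cube_capacity_gb / stack_height


def capacity_gain(new_gb: float, old_gb: float) -> float:
    """Fractional increase of new_gb over old_gb (0.5 means 50% more)."""
    return new_gb / old_gb - 1


cubes = {"8-high": (24, 8), "12-high": (36, 12)}
for name, (capacity_gb, height) in cubes.items():
    die_gb = per_die_capacity_gb(capacity_gb, height)
    print(f"{name}: {die_gb:.0f} GB per die ({die_gb * 8:.0f} Gb)")

print(f"12-high vs 8-high: {capacity_gain(36, 24):.0%} more capacity")
```

Under this assumption both cube options use 3GB (24Gb) dies, so the 12-high cube’s extra capacity comes from stacking four more dies rather than from denser dies.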